Social Media

Industry Challenges in Social Media AI

- Extremely high volumes of real-time content

- Identifying toxicity, abuse, and policy violations

- Handling diverse languages, dialects, and cultural nuances

- Managing fast-evolving harmful content patterns

- Multi-modal moderation across text, images, audio & video

How rProcess Supports the Social Media Ecosystem

We transform massive user‑generated content streams into structured, AI‑ready datasets that power smarter moderation and better user experiences.

Annotation Services for Social Media

- Toxicity detection

- Hate speech & harassment labeling

- Policy violation tagging

- Sentiment and emotion analysis

- Chatbot and virtual assistant training

- Violence, self-harm & dangerous acts identification

- Profanity & harmful audio signal detection

- Nudity & sensitive visual content tagging

- Multi-language audio moderation

- Video behavior categorization for guideline enforcement

Why Choose rProcess?

Trusted Partner for Global Social Platforms

We power moderation pipelines with high-quality datasets used to train large-scale safety and content classification models.

High Accuracy on Sensitive, Nuanced Violations

Our teams detect subtle forms of toxicity, harassment, and guideline breaches across text, image, audio, and video.

Scalable 24/7 Moderation Annotation Workflows

We support continuous, real-time content ingestion across global time zones.

Secure, Confidential & Consistent Through Multi‑Tier QA

Robust privacy controls and rigorous QA ensure safe and consistent labeling across cultural contexts.

Social Media Case Studies

E-commerce platforms frequently encounter policy violations, including unauthorized brand usage and inappropriate use of sensitive content. These violations expose platforms to significant legal risks and reputational damage.

- Developed a context-aware moderation system for accurate content review.

- Detected and rejected policy-violating listings efficiently.

- Applied rules for intellectual property misuse.

- Flagged content sensitive to cultural or religious sentiments.

- Ensured compliance with platform governance policies, reducing risk.

- Religious Sensitivity: Blocked misuse of religious symbols on floor mats.

- Intellectual Property: Prevented unauthorized use of Disney IP.

- Efficiency: Improved moderation accuracy, reduced legal exposure, and strengthened platform trust.

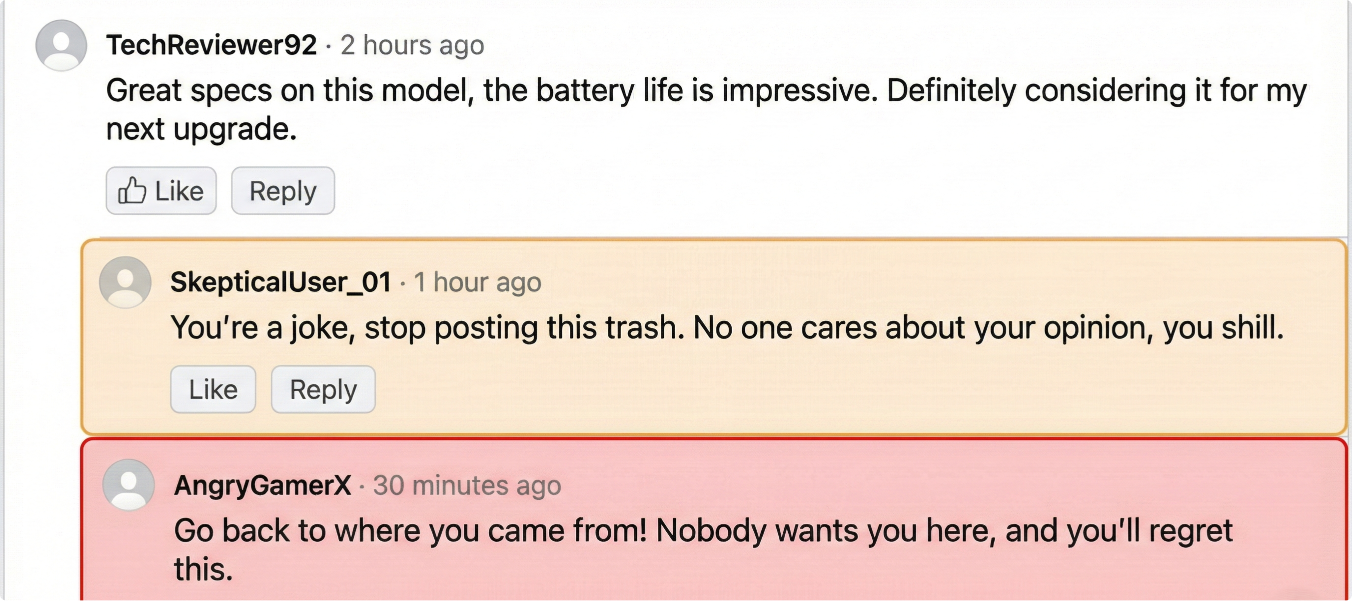

E-commerce and social platforms face risks from user comments that contain hate speech, harassment, spam, or inappropriate language. Unchecked, these can harm platform reputation, violate policies, and expose the platform to legal challenges.

- Automated text moderation for policy violations.

- Categorized comments (Profanity, Hate Speech, Public Shaming, etc.).

- Severity-based tagging (Borderline / Not a Violation).

- Enforced rules against insults, threats, discrimination, and data disclosure.

- Ensured civil, fact-based, and policy-compliant moderation.

- Reduced incidents of harassment and discriminatory language in comments.

- Minimized legal and reputational risks for the platform.

- Improved comment quality and maintained a safe, respectful user environment.
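As an illustration only, the category and severity tags described above could be captured in a small label schema like the sketch below. The class and field names (`Category`, `Severity`, `CommentLabel`, `comment_id`) are hypothetical, the category list covers only the examples named above, and the `VIOLATION` severity is an assumed third level alongside the "Borderline" and "Not a Violation" tags.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Category(Enum):
    # Only the categories named in the case study; a real taxonomy has more.
    PROFANITY = "Profanity"
    HATE_SPEECH = "Hate Speech"
    PUBLIC_SHAMING = "Public Shaming"


class Severity(Enum):
    VIOLATION = "Violation"  # assumed level, not named in the case study
    BORDERLINE = "Borderline"
    NOT_A_VIOLATION = "Not a Violation"


@dataclass(frozen=True)
class CommentLabel:
    """One annotator decision for one comment (hypothetical record shape)."""
    comment_id: str
    category: Optional[Category]
    severity: Severity

    def __post_init__(self) -> None:
        # A clean comment carries no category; a flagged one must carry one.
        is_clean = self.severity is Severity.NOT_A_VIOLATION
        if is_clean and self.category is not None:
            raise ValueError("a non-violating comment must not have a category")
        if not is_clean and self.category is None:
            raise ValueError("a flagged comment needs a category")
```

Validating the combination at construction time is one simple way to keep labels consistent across annotators; a production pipeline would likely enforce this in the annotation tool itself.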

User-uploaded videos may contain harmful, misleading, or sensitive material such as real-world violence, graphic content, or inappropriate themes. These can negatively impact user safety, brand reputation, and compliance with platform policies.

- Reviewed YouTube and user-generated videos against community guidelines.

- Classified content into severity levels (appropriate, slightly inappropriate, inappropriate, extreme).

- Flagged real-world violence footage while distinguishing it from acceptable simulated/gameplay violence.

- Applied consistent labeling to reduce misclassification and ensure fair moderation.

- Accurately identified harmful or sensitive video content.

- Reduced risk of policy violations and brand damage.

- Improved trust and safety for users through consistent guideline enforcement.
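The four severity levels listed above lend themselves to an ordered enumeration. The sketch below is a hypothetical illustration, not a description of the actual workflow: it assumes several annotators label each video and that a strict majority vote decides the final label, with ties left unresolved for escalation. The names `VideoSeverity` and `majority_label` are invented for this example.

```python
from collections import Counter
from enum import IntEnum
from typing import Optional, Sequence


class VideoSeverity(IntEnum):
    # Ordered from least to most severe, as listed in the case study.
    APPROPRIATE = 0
    SLIGHTLY_INAPPROPRIATE = 1
    INAPPROPRIATE = 2
    EXTREME = 3


def majority_label(votes: Sequence[VideoSeverity]) -> Optional[VideoSeverity]:
    """Return the label chosen by a strict majority of annotators.

    Returns None when there are no votes or no label wins more than half,
    which a reviewer could treat as a signal to escalate the video.
    """
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count * 2 > len(votes) else None
```

For example, two "inappropriate" votes and one "extreme" vote resolve to "inappropriate", while a one-to-one split returns `None`.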