rAnnotate

Transform Raw Data Into Intelligent Training Datasets with Expert-Led Precision

Milestones & Metrics

Multi-industry expertise across automotive, sports, agriculture, retail, and construction.

Accuracy

4-tier QA: automated validation, expert annotation, peer review, final audit.

3D point cloud annotation with 97%+ spatial accuracy.

Specialists

Skilled professionals across autonomous driving, sports, agriculture, and aquaculture.

Delivery Rate

Reliable turnaround with optimized workflows.

Annotation Modalities We Support

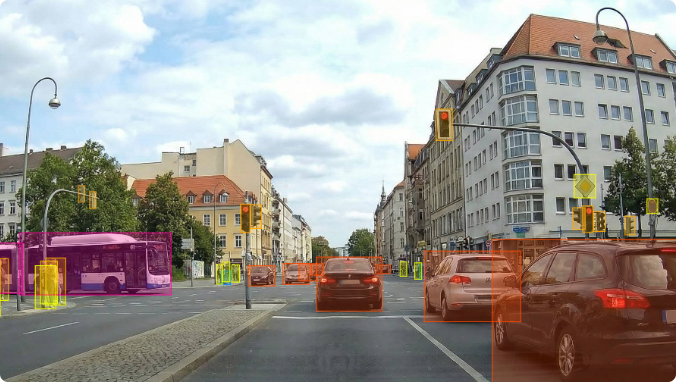

Image & Video Annotation

Transform raw visual data into accurately labeled training datasets that fuel high-performing computer vision models. Our expert annotation teams specialize in everything from object detection and semantic segmentation to complex scene analysis, ensuring pixel-perfect precision across all camera modalities and image formats.

Annotation Types (Image & Video):

2D Object Detection

Bounding boxes with tight-fit methodology, polygon segmentation, and instance segmentation.

Semantic & Instance Segmentation

Pixel-level classification for roads, vegetation, objects, meat quality, and fish bodies.

Action Recognition

Sports events, construction activities, worker safety, and behavioral analysis.

Keypoint & Pose Estimation

15-point anatomical mapping (fish, human pose), player tracking, and skeletal annotation.

Object Tracking

Temporal tracking with unique IDs, ball trajectories, player movement, and equipment utilization.

Quality Assessment

4-tier image quality classification (Poor, Fair, Good, Excellent) for facial recognition datasets.
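As an illustration of the tight-fit convention for 2D boxes, the sketch below derives the smallest axis-aligned box that encloses a polygon annotation. It also computes intersection-over-union (IoU), which is one common way a reviewer might compare a hand-drawn box against that tight box. This is a minimal sketch that assumes pixel coordinates. The function names are hypothetical and do not describe rAnnotate's internal tooling or QA thresholds.

```python
from typing import Iterable, Tuple

# Hypothetical helper names; an illustrative sketch, not rAnnotate tooling.
Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def tight_box_from_polygon(vertices: Iterable[Point]) -> Box:
    """Smallest axis-aligned box that encloses every polygon vertex."""
    pts = list(vertices)
    if not pts:
        raise ValueError("polygon has no vertices")
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]
    return (min(xs), min(ys), max(xs), max(ys))


def box_area(box: Box) -> float:
    """Area of a box; degenerate (inverted) boxes count as zero."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def box_iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes (0.0 when disjoint)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


# Example: compare a loosely drawn box with the tight box derived from a polygon.
polygon = [(10, 20), (50, 18), (55, 60), (12, 58)]
tight = tight_box_from_polygon(polygon)  # (10, 18, 55, 60)
hand_drawn = (8, 15, 57, 62)
print(tight, round(box_iou(tight, hand_drawn), 3))
```

An IoU close to 1.0 means the drawn box is nearly as tight as the polygon allows. Any acceptance threshold would be project-specific.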

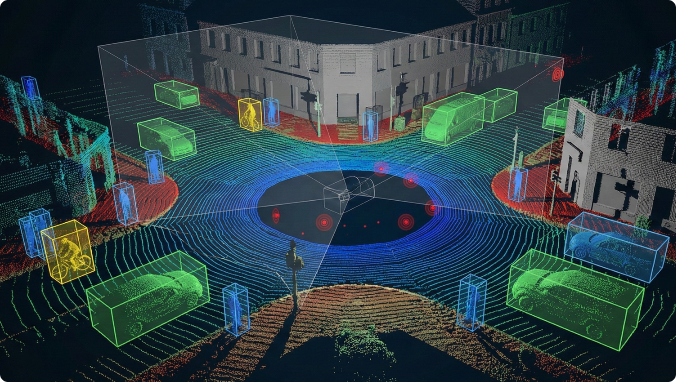

LiDAR & Sensor Fusion Annotation

Unlock the full potential of 3D point cloud data with expert LiDAR annotation services. Our specialists label millions of 3D points with precision, enabling accurate depth perception, object detection, and spatial understanding for autonomous systems. We seamlessly integrate LiDAR data with camera and RADAR inputs for comprehensive sensor fusion annotation.

Annotation Types (LiDAR & Sensor Fusion):

3D Bounding Cuboid Annotation

Precise 3D boxes with dimension-based classification, tight bounding that follows the "single box per object" rule, and ISO-standardized cuboids.

Object Tracking & Trajectory

Temporal tracking with unique IDs, smooth trajectories, and motion physics validation.
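Motion physics validation can take many forms. One simple automated check is sketched below under stated assumptions. It groups boxes by track ID, orders them by frame, and flags any step whose implied speed exceeds a plausible limit. It also flags a track ID that appears twice in one frame, since that would violate the unique-ID rule. The frame interval, speed limit, and function name are hypothetical. Positions are assumed to be box centers in metres, expressed in a single fixed frame.

```python
from collections import defaultdict
from math import dist
from typing import Dict, List, Tuple

# Hypothetical helper; an illustrative sketch, not rAnnotate tooling.
# One tracked annotation: (frame_index, track_id, x, y, z), positions in metres.
Annotation = Tuple[int, str, float, float, float]


def find_implausible_jumps(
    annotations: List[Annotation], frame_dt: float, max_speed: float
) -> List[Tuple[str, int, float]]:
    """Return (track_id, frame_index, implied_speed) for suspicious track steps.

    A step is flagged when the displacement between consecutive observations of
    the same track implies a speed above max_speed (m/s), or when one track ID
    appears twice in the same frame (reported with speed = inf).
    """
    tracks: Dict[str, List[Tuple[int, Tuple[float, float, float]]]] = defaultdict(list)
    for frame, track_id, x, y, z in annotations:
        tracks[track_id].append((frame, (x, y, z)))

    flagged: List[Tuple[str, int, float]] = []
    for track_id, observations in tracks.items():
        observations.sort()  # chronological order by frame index
        for (f0, p0), (f1, p1) in zip(observations, observations[1:]):
            elapsed = (f1 - f0) * frame_dt
            if elapsed <= 0:
                # Same ID twice in one frame: a unique-ID violation.
                flagged.append((track_id, f1, float("inf")))
                continue
            speed = dist(p0, p1) / elapsed
            if speed > max_speed:
                flagged.append((track_id, f1, speed))
    return flagged
```

For example, consider an assumed 10 Hz frame rate (`frame_dt=0.1`) with `max_speed=60.0`. A car box that jumps 18.5 m between consecutive frames implies roughly 185 m/s, so it is flagged for review.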

Multi-Sensor Fusion

Synchronized annotation across:

- LiDAR + Camera (3D cuboids projected onto images)

- LiDAR + RADAR (spatial + velocity)

- Triple Fusion (LiDAR + Camera + RADAR), optionally extended with Thermal, for complete 360° perception
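In LiDAR + Camera fusion, projecting a 3D cuboid onto a camera image comes down to two steps: compute the cuboid's eight corners, then apply a pinhole camera model. The sketch below assumes the cuboid has already been transformed from the LiDAR frame into the camera frame, so the extrinsic calibration step is omitted. It also ignores lens distortion and uses hypothetical function names. It is an illustration only, not rAnnotate's projection code.

```python
from itertools import product
from math import cos, sin
from typing import List, Tuple

# Hypothetical helper names; an illustrative sketch, not rAnnotate tooling.
Vec3 = Tuple[float, float, float]


def cuboid_corners(center: Vec3, size: Vec3, yaw: float) -> List[Vec3]:
    """Eight corners of a cuboid expressed in a camera frame.

    Camera convention assumed here: x right, y down, z forward.
    size = (length, width, height); yaw rotates the box about the vertical (y) axis.
    """
    cx, cy, cz = center
    length, width, height = size
    corners = []
    for sx, sy, sz in product((-0.5, 0.5), repeat=3):
        dx, dy, dz = sx * length, sy * height, sz * width  # box-local offsets
        x = cos(yaw) * dx + sin(yaw) * dz  # rotation about the y axis
        z = -sin(yaw) * dx + cos(yaw) * dz
        corners.append((cx + x, cy + dy, cz + z))
    return corners


def project_to_image(
    points: List[Vec3], fx: float, fy: float, u0: float, v0: float
) -> List[Tuple[float, float]]:
    """Pinhole projection: u = fx*x/z + u0, v = fy*y/z + v0."""
    pixels = []
    for x, y, z in points:
        if z <= 0:
            raise ValueError("corner lies at or behind the camera plane")
        pixels.append((fx * x / z + u0, fy * y / z + v0))
    return pixels


def enclosing_2d_box(pixels: List[Tuple[float, float]]) -> Tuple[float, float, float, float]:
    """Axis-aligned image box (u_min, v_min, u_max, v_max) around projected corners."""
    us = [u for u, _ in pixels]
    vs = [v for _, v in pixels]
    return (min(us), min(vs), max(us), max(vs))
```

The enclosing 2D box of the projected corners is one simple way to relate a 3D label to a 2D one. Raising an error for corners behind the camera keeps the sketch short. A real tool would more likely clip partially visible boxes instead.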

Semantic Segmentation

Point cloud classification for surfaces (roads, sidewalks, vegetation), objects, and infrastructure.

2D-to-3D Mapping

Mapping 2D bounding boxes to their corresponding 3D representations, so each object carries consistent labels across image and point-cloud data.

RADAR & Geospatial Annotation

Enable precise object detection and scene understanding in challenging conditions with expert RADAR and geospatial annotation. RADAR data excels in adverse weather and low-visibility scenarios, while geospatial annotation unlocks insights from satellite and aerial imagery. Our specialists handle the unique challenges of RADAR signal interpretation and large-scale geographic data labeling.

RADAR & Geospatial Data Annotation Services

RADAR Annotation Services:

- RADAR + Camera Fusion - Synchronized annotation across RADAR detections and camera images.

- Signal Classification - Target vs. clutter discrimination and interference rejection.

- Weather Condition Classification - Environmental factors affecting RADAR performance.

Geospatial Annotation Services:

- Land Use & Land Cover

- Building & Infrastructure Detection

- Change Detection

- Precision Agriculture

- Disaster Assessment

- Environmental Monitoring

- Urban Planning

Industries We Serve

Why Choose rProcess?

Trained specialists across 10+ industries including automotive, agriculture, construction, logistics, robotics, retail, aquaculture, and environmental mapping.

We deliver domain-informed annotations that improve model accuracy, not generic, one-size-fits-all labeling.

From pixel-level segmentation to 3D cuboids and multi-modal fusion, rProcess provides full-spectrum annotation across:

- Images & video

- LiDAR

- RADAR

- Thermal/IR

- Aerial & satellite imagery

We follow ISO-standard conventions and client-defined rules:

- Single box per object

- Tight bounding rules

- Minimum LiDAR/RADAR point rules

- Consistent IDs and coordinate frames
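A minimum-point rule can be checked automatically by counting how many LiDAR points fall inside each cuboid. The sketch assumes a z-up frame and cuboids defined by a center, a (length, width, height) size, and a yaw angle about z. The threshold is assumed to be chosen by the client. The function names and any threshold value are hypothetical.

```python
from math import cos, sin
from typing import Iterable, Tuple

# Hypothetical helper names; an illustrative sketch, not rAnnotate tooling.
Vec3 = Tuple[float, float, float]


def points_in_cuboid(points: Iterable[Vec3], center: Vec3, size: Vec3, yaw: float) -> int:
    """Count points inside a yaw-rotated cuboid in a z-up frame.

    size = (length, width, height); length runs along the box's heading.
    """
    cx, cy, cz = center
    length, width, height = size
    c, s = cos(yaw), sin(yaw)
    count = 0
    for x, y, z in points:
        dx, dy, dz = x - cx, y - cy, z - cz
        # Rotate the offset by -yaw to express it in the box's local frame.
        local_x = c * dx + s * dy
        local_y = -s * dx + c * dy
        if abs(local_x) <= length / 2 and abs(local_y) <= width / 2 and abs(dz) <= height / 2:
            count += 1
    return count


def meets_min_points(
    points: Iterable[Vec3], center: Vec3, size: Vec3, yaw: float, min_points: int
) -> bool:
    """True if the cuboid contains at least min_points points."""
    return points_in_cuboid(points, center, size, yaw) >= min_points
```

A cuboid that fails the check would be routed back for review or removal, depending on the client's rules.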

We excel at annotating challenging data involving:

- Night, fog, rain, glare, low-light

- Occlusions

- Vulnerable road users

- Cluttered agricultural or industrial environments

We deliver:

- 1M+ images monthly

- 50K+ video hours

- 100K+ LiDAR frames

- 10,000+ sq km geospatial imagery

Every delivery is backed by:

- Expert review

- Automated validation

- Model-assisted verification

- Domain audits

- Continuous feedback

We can ramp up 50+ trained annotators within 48–72 hours.

Supported formats: JSON, CSV, XML, PCD, LAS/LAZ, BIN, and PLY. Supported coordinate frames: ECEF, UTM, and vehicle frames.
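PCD is one of the listed formats. For reference, the sketch below writes an unorganized x/y/z point cloud as an ASCII PCD (v0.7) file, the text variant of the format used by the Point Cloud Library. It assumes 32-bit float x/y/z fields only and omits attributes such as intensity. The function name is hypothetical, and this is not rAnnotate's export code.

```python
from typing import Iterable, Tuple

# Hypothetical helper name; an illustrative sketch, not rAnnotate tooling.


def to_ascii_pcd(points: Iterable[Tuple[float, float, float]]) -> str:
    """Serialize x/y/z points as an unorganized (HEIGHT 1) ASCII PCD v0.7 file."""
    pts = list(points)
    n = len(pts)
    header = [
        "# .PCD v0.7 - Point Cloud Data file format",
        "VERSION 0.7",
        "FIELDS x y z",
        "SIZE 4 4 4",      # bytes per field (32-bit floats)
        "TYPE F F F",      # F = floating point
        "COUNT 1 1 1",     # one element per field
        f"WIDTH {n}",      # unorganized cloud: width equals the point count
        "HEIGHT 1",
        "VIEWPOINT 0 0 0 1 0 0 0",  # translation (0,0,0), identity quaternion
        f"POINTS {n}",
        "DATA ascii",
    ]
    body = [f"{x:.6f} {y:.6f} {z:.6f}" for x, y, z in pts]
    return "\n".join(header + body) + "\n"
```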

rAnnotate Case Studies

Autonomous driving models require accurate segmentation of LiDAR point clouds to identify both static and dynamic objects within a defined range of interest. The challenge lies in annotating complex scenes with multiple object types (vehicles, traffic signs, vegetation, obstacles, etc.) while addressing data noise (reflections, blooming, scan errors) and ensuring high-quality outputs at scale.

- Scaled the workforce from 20 to 140 annotators.

- 20-day structured training & onboarding.

- Offline LiDAR annotation tool for objects & noise categories.

- 1:4 QA-to-annotator ratio for quality control.

- Accurately segmented objects within a 100–250 m region of interest (RoI).

- Kept the error rate at 18.5 errors per task, below the target of fewer than 20.

- Delivered 100,000+ annotated frames for autonomous driving models.

- Achieved 98%+ dataset reliability through multi-level QA.

- Established a scalable pipeline supporting rapid workforce expansion and consistent delivery.

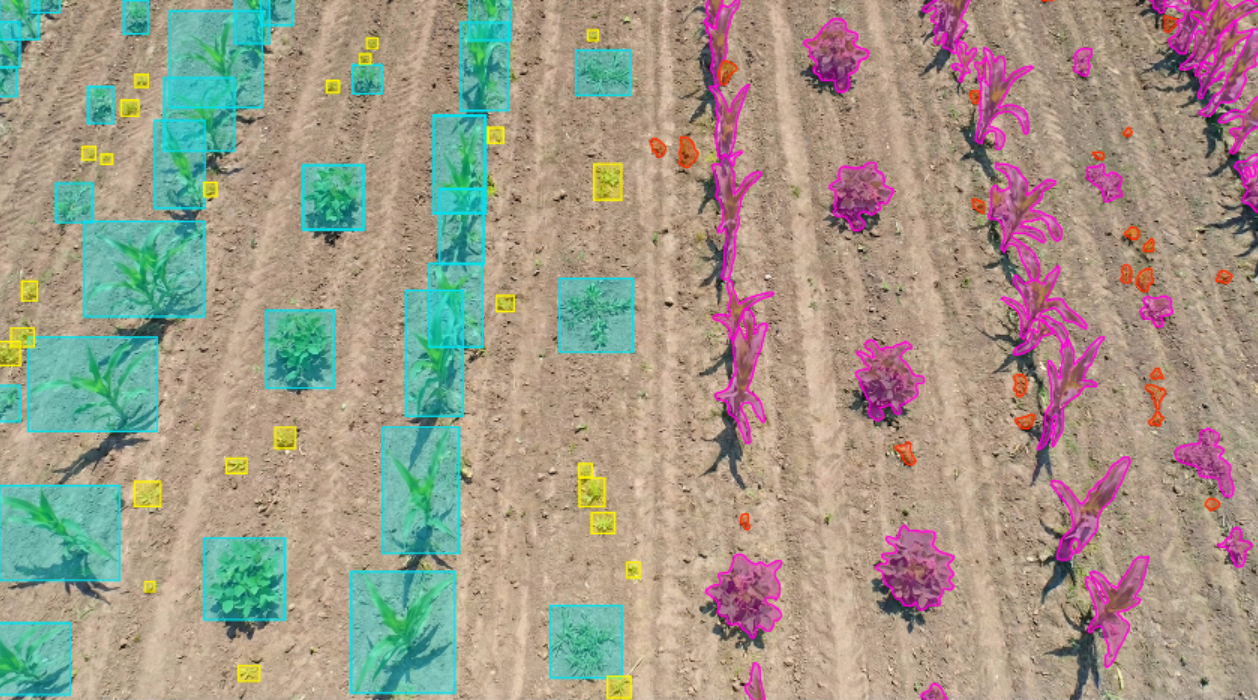

Efficient farming requires distinguishing crops from weeds to optimize chemical usage. Traditional blanket spraying methods waste resources, increase costs, and damage the environment. There was a need for high-quality labeled datasets to train AI-powered See & Spray technology that targets weeds precisely.

- Labeled farm images to differentiate soybean and corn crops from weeds.

- Applied segmentation (15 min per image) and bounding box annotation (3 min per image) to ensure accurate classification.

- Created structured datasets enabling machine learning models to guide smart sprayers.

- Successfully annotated 500,000 images.

- Delivered high-accuracy segmentation and bounding box datasets.

- Enabled AI-driven spraying systems to reduce chemical use, cut costs, and support sustainable farming.

rProcess partnered with a leading AI and robotics company to revolutionize recycling economics. The project focused on creating high-quality annotated datasets to train machine vision models for automated waste segregation in Municipal Solid Waste (MSW), Construction & Demolition (C&D), E-Waste, and battery recycling.

- Developed a large dataset with precise segmentation and labeling of waste objects.

- Applied AI, computer vision, and smart sorting system workflows.

- Delivered facility-wide data insights for material purity, performance, and waste characterization.

- Supported design of robotics systems for targeted segregation of high-value commodities.

- Enabled the creation of machine learning models for accurate waste classification.

- Annotated 350 diverse datasets from industries such as manufacturing, C&D, electronics waste, and MSW.

- Supported automated robotics for safe, efficient, and precise segregation.

- Improved recovery of recyclable materials, reducing landfill dependency.

- Provided scalable annotation workflows for multiple waste domains.

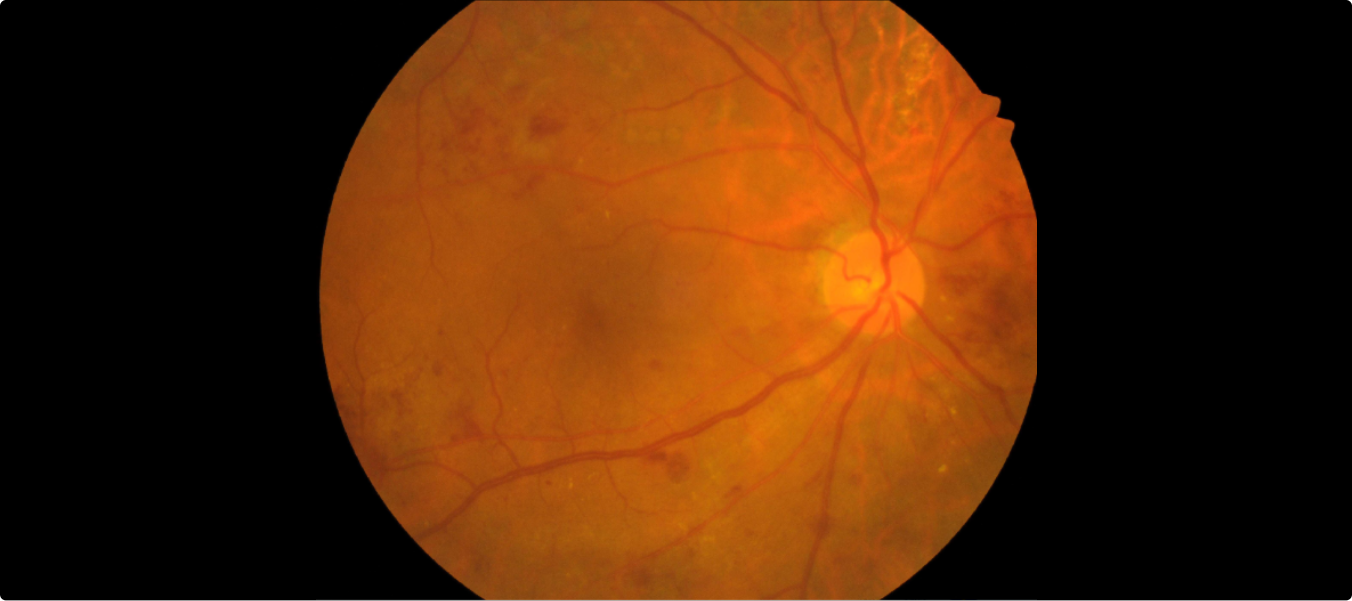

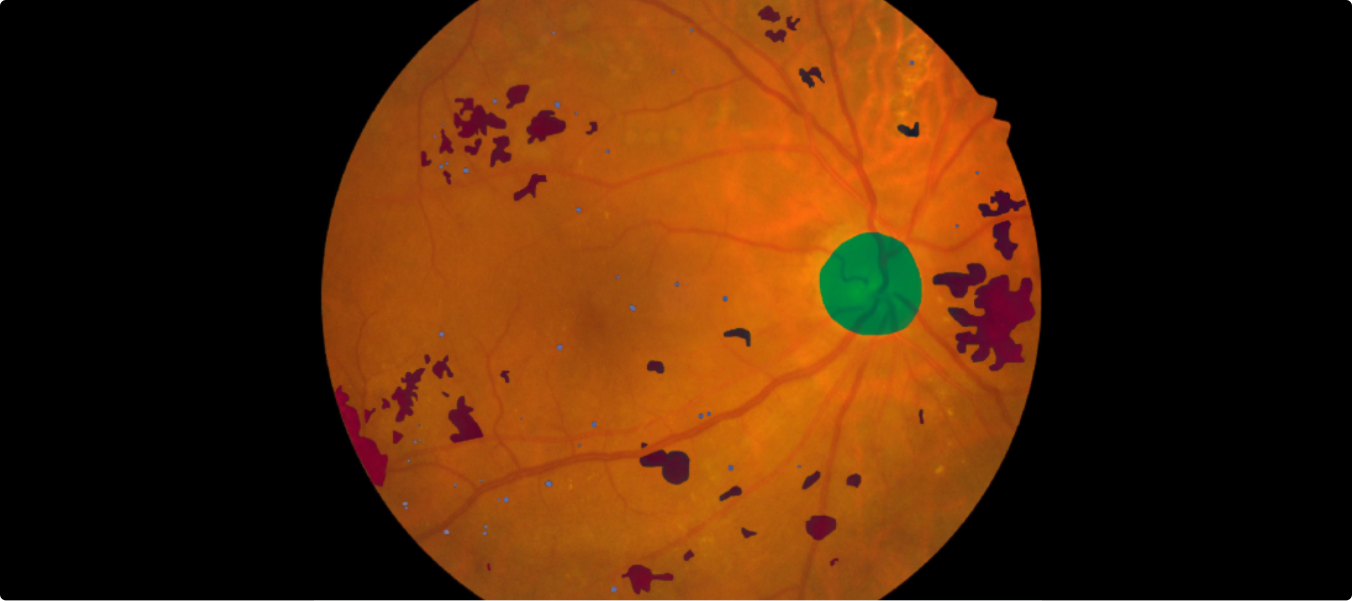

Accurate annotation of retinal fundus images is critical for detecting and classifying Diabetic Retinopathy (DR) and Diabetic Macular Edema (DME). The challenge lies in ensuring pixel-level precision to enable reliable model training for ophthalmology applications.

- Deployed an on-demand data annotation model for high accuracy.

- Performed pixel-level labeling of lesions to capture fine details.

- Supported the client’s goal of advancing AI-powered diagnostic solutions in healthcare.

- Annotated ~1,000 retinal images, ensuring precise classification of DR and DME lesions.

- Delivered a high-quality annotated dataset for ophthalmology AI models.

- Enabled accurate detection and segmentation of DR and DME.

rAnnotate - FAQs

What types of data does rAnnotate support?

rAnnotate supports annotation for images, videos, LiDAR point clouds, RADAR signals, geospatial and satellite imagery, drone footage, and fused multi-sensor datasets across industries such as automotive, retail, agriculture, sports, construction, and more.

Which annotation techniques do you offer?

How do you ensure the accuracy of annotations?

We use a four-tier QA workflow combining automated checks, expert review, peer quality control, and final audit. This framework consistently delivers more than 97 percent accuracy across data types and project sizes.

Can rAnnotate handle large-scale enterprise projects?

Can we start with a pilot project?

Yes. Most clients begin with a pilot of 100 to 500 samples to validate quality, workflows, and communication. Once the pilot is approved, we transition seamlessly into full production.

Do you work on client-provided annotation tools?

Yes. We are fully tool-agnostic and have experience with most major annotation platforms in the market. We also work with selected platform partners to offer bundled models that combine tooling and annotation services.

How do you handle data security and privacy?

Do you support LiDAR–Camera–RADAR sensor fusion?

Yes. We synchronize and annotate multi-sensor datasets to enable object tracking, 3D–2D alignment, depth validation, motion estimation, and robust scene understanding for autonomous driving, robotics, and industrial systems.

What industries does rAnnotate specialize in?

Key sectors include agriculture, automotive and ADAS, construction, retail and e-commerce, waste management, sports analytics, and healthcare imaging.

How is pricing structured?

Can you manage ongoing multi-month or multi-year labeling pipelines?

Yes. We act as an extension of your ML and data teams, supporting continuous data ingestion, re-labeling, versioning, and dataset optimization throughout your AI lifecycle.

How do you handle revisions and corrections?

Can you help define ontologies and annotation guidelines?

Yes. We frequently help clients create or refine ontologies, labeling rules, edge-case guidelines, and reference documentation to ensure clarity, consistency, and scalability across teams and tools.

Ready to Power Your AI with High-Quality Training Data?

Join 100+ global enterprises across automotive, sports, agriculture, healthcare, retail, construction, and more who trust rAnnotate for mission-critical annotation projects.