Automotive

Industry Challenges in Automotive AI

- Safety-critical perception models that demand exceptionally high accuracy

- Increasing reliance on multi-sensor data (Camera, LiDAR, RADAR, IMU)

- Need for accurate detection of vulnerable road users, vehicles, road markings & signage

- Dense, dynamic environments with high annotation complexity

- Edge-case scenarios such as night driving, fog, rain, glare, and occlusion

How rProcess Supports the Automotive Ecosystem

Our experts annotate multi-modal datasets with pixel-level precision, delivering the accuracy required to train state-of-the-art automotive AI models.

Annotation Services for Automotive

- Semantic & instance segmentation

- Lane & road marking

- Traffic sign & signal annotation

- Pedestrian, vehicle & object detection

- Occlusion, depth & visibility tagging

- Tracking across video sequences

- 3D cuboids, segmentation & classification

- Drivable area & free space mapping

- Multi-sensor alignment (LiDAR–Camera–RADAR)

- Object tracking across 3D sequences

- Spatial reasoning for autonomous navigation

- Parking assistance

- RADAR point interpretation for low-visibility perception

- Geospatial mapping & road network annotation

- Long-range obstacle detection

- Road boundary & feature mapping

Why Choose rProcess?

Expertise in ADAS & Autonomous Vehicle Workflows

Our teams bring deep experience across ADAS and AV perception pipelines, ensuring precise, safety‑ready annotation for complex road, traffic, and driving environments.

Scalable Workforce for Continuous AV Data Pipelines

We rapidly scale to support large, always‑on data streams from global fleets—delivering high‑volume annotation across diverse driving scenarios without delays.

Customized QA for Safety‑Grade 97%+ Accuracy

Our multilayered QA framework is engineered for mission-critical automotive datasets and consistently achieves the 97%+ accuracy required for safe model deployment.

Multi‑Sensor Expertise with Certified, Secure Operations

With strong capabilities across Camera, LiDAR, RADAR, and Audio modalities, we deliver unified, production‑ready datasets within a secure, compliant annotation environment.

Automotive Case Studies

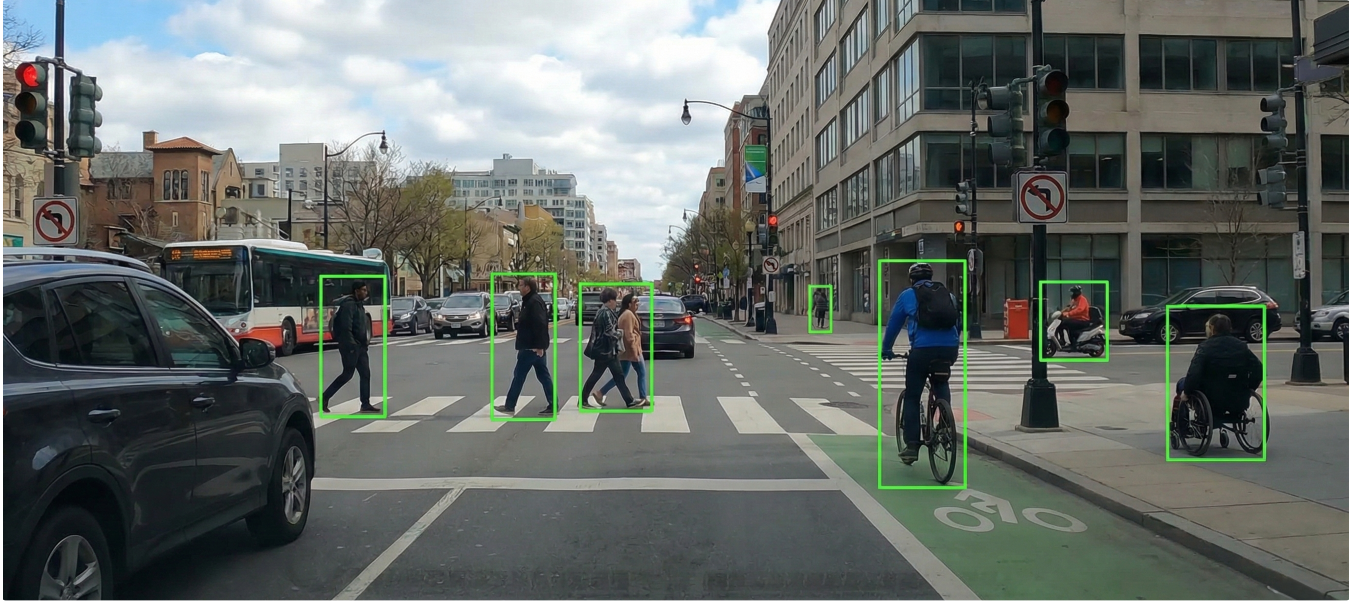

Autonomous driving systems require high-quality annotated datasets to detect and classify Vulnerable Road Users (VRUs) such as pedestrians, animals, ridable vehicles, and mobility devices. Capturing these objects in dynamic vehicle environments is complex, with challenges like occlusion, orientation, and diverse object attributes.

- Annotated VRUs in vehicle-captured camera and LiDAR data.

- Processed dynamic sequences with up to 40 frames per sequence (images + point clouds).

- Built structured annotation for 33 VRU classes (human, animal, ridable vehicles, mobility devices).

- Captured 13 object attributes (e.g., occlusion, orientation, cargo presence).

- Established a multi-level QC pipeline (Self QC → Shadow QC → QA team with tool validation).

- Scaled workforce from 45 to 250 annotators within 2 months (30 onboarded per week).

- 9-month project duration with sustained high throughput.

- 33 VRU classes & 13 attributes consistently annotated across datasets.

- Quality maintained with multi-level QC, ensuring accuracy across thousands of frames.

- Delivered a scalable, certified annotation workforce capable of handling large autonomous driving projects.

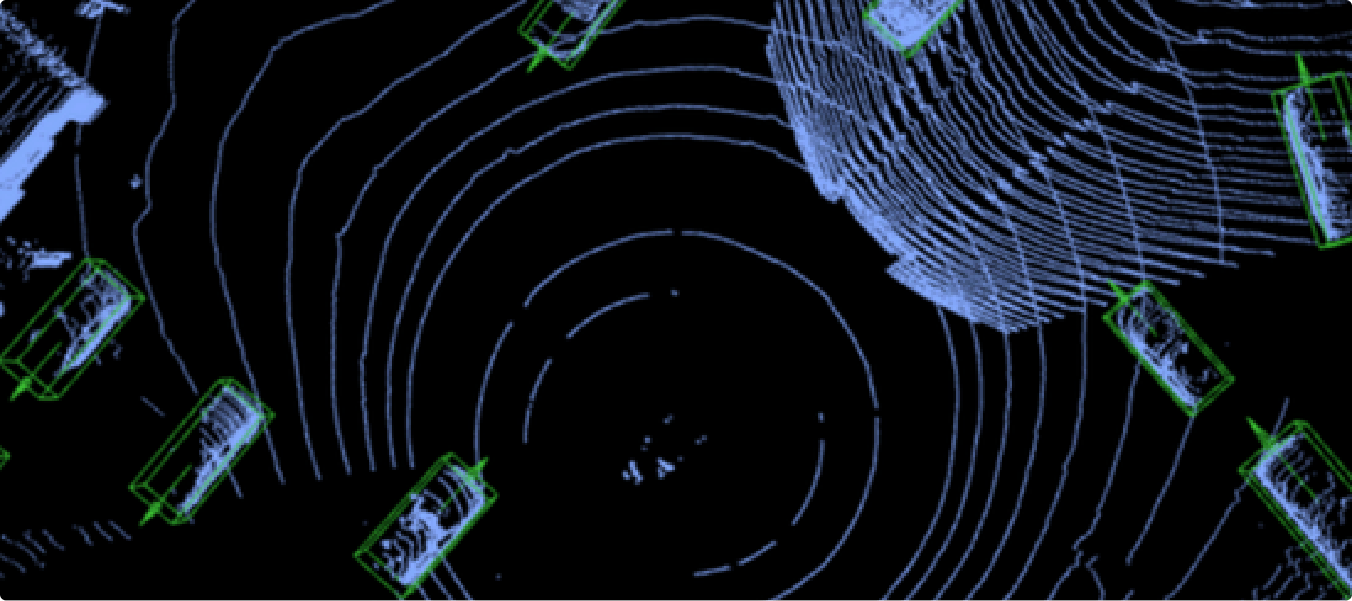

Autonomous driving systems require precise identification of both static and dynamic objects in real-world environments. Annotating complex multi-sensor data (2D images and 3D point clouds) across diverse object classes and attributes is highly challenging, demanding skilled annotators, scalability, and stringent quality control to ensure reliable datasets.

- Structured 3D annotation for camera & LiDAR data.

- Scaled workforce to ~300 trained annotators.

- 3-week training & certification program.

- Clear workflows and annotation guidelines.

- Two-tier QC (Self-QC + Dedicated QC) for accuracy.

- Project status: ongoing since November 2024.

- Workforce scaled to ~300 annotators (starting from 42).

- Delivered consistent annotation across 29 object classes and 23 attributes.

- Ensured high accuracy through 100% file validation before submission.

- Established a robust and scalable annotation pipeline supporting autonomous driving datasets.

Autonomous driving models require accurate segmentation of LiDAR point clouds to identify both static and dynamic objects within a defined range of interest. The challenge lies in annotating complex scenes with multiple object types (vehicles, traffic signs, vegetation, obstacles, etc.) while addressing data noise (reflections, blooming, scan errors) and ensuring high-quality outputs at scale.

- Scaled workforce from 20 to 140 annotators.

- 20-day structured training & onboarding.

- Used an offline LiDAR annotation tool covering both object and noise categories.

- 1:4 QA-to-annotator ratio for quality control.

- Segmented objects accurately within a 100–250 m region of interest (RoI).

- Kept the error rate at 18.5 errors per task, below the target of fewer than 20.

- Delivered 100,000+ annotated frames for autonomous driving models.

- Achieved 98%+ dataset reliability through multi-level QA.

- Established a scalable pipeline supporting rapid workforce expansion and consistent delivery.

Developing autonomous driving models requires a precise understanding of vehicle behavior in real-world conditions. Capturing actions such as turning, stopping, and interactions with surroundings is complex, demanding accurate video annotation and context-rich descriptions to train reliable AI systems.

- Annotated vehicle actions (moving, stopping, turning, interactions) with high precision.

- Used advanced video annotation tools for accurate tracking and labeling.

- Generated detailed, context-aware captions reflecting the driver’s perspective and scene dynamics.

- Completed ~6,000 video annotation tasks.

- Delivered high-quality annotated datasets for vehicle behavior analysis.

- Enabled improved training of autonomous driving models.

- Enhanced accuracy in detecting real-world driving scenarios and interactions.